iOS Forensics: iLEAPP updates

Alexis Brignoni wrote iLEAPP to parse iOS logs, events, and plists. For part of my thesis ("iOS Forensics: Data hidden within Map Cache Files"), I extended iLEAPP to parse three artifacts related to the com.apple.geod daemon:

- geodApplications: List of applications that accessed the location cache
- geodMapTiles: Map tiles downloaded by applications that display maps
- geodPDPlaceCache: Places a user examined in an application

Each of these artifacts is discussed in detail in my blog post linked above and in my submitted thesis (PDF).

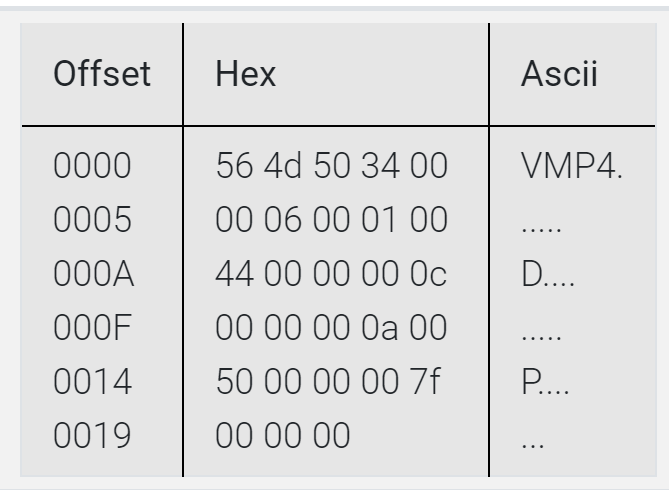

I also added several helper functions so that map tiles can be displayed in the iLEAPP report. The main one, generate_hexdump(), produces output similar to the Linux "xxd" command.

By default, only the first 28 bytes are shown, but this can be changed with an argument.
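To illustrate the layout on its own, here is a minimal plain-text sketch of an xxd-style dump: an offset column, a hex column, and an ASCII column where non-printable bytes become ".". This is not the iLEAPP code; `hexdump_text` and its `max_bytes` parameter are hypothetical names, and the 28-byte default simply mirrors the truncation described above.

```python
def hexdump_text(data: bytes, bytes_per_row: int = 5, max_bytes: int = 28) -> str:
    """Hypothetical plain-text, xxd-style dump of the first max_bytes bytes."""
    data = data[:max_bytes]  # only show the leading bytes, like the report does
    lines = []
    for offset in range(0, len(data), bytes_per_row):
        chunk = data[offset:offset + bytes_per_row]
        hex_part = " ".join(f"{b:02x}" for b in chunk)
        # Printable ASCII (0x20-0x7E) is shown as-is, everything else as '.'
        ascii_part = "".join(chr(b) if 0x20 <= b < 0x7F else "." for b in chunk)
        # Pad the hex column so the ASCII column still lines up on a short last row
        lines.append(f"{offset:04X}  {hex_part:<{bytes_per_row * 3 - 1}}  {ascii_part}")
    return "\n".join(lines)
```

Calling `print(hexdump_text(tile_bytes))` on raw tile data would print one row per five bytes; raising `max_bytes` shows more of the file at the cost of a longer report cell.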

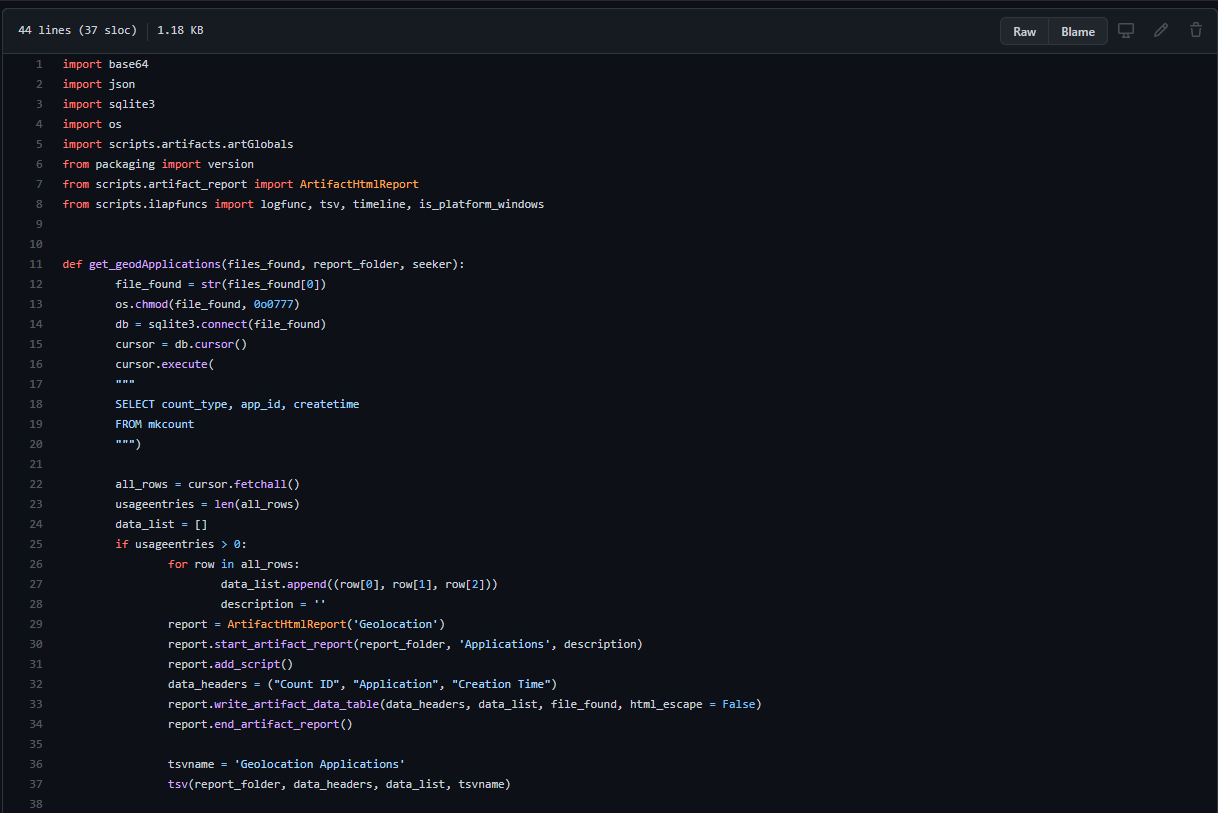

The following code shows the changes I added, which were merged in pull request #71.

ilapfuncs.py

```python
import binascii  # used by generate_hexdump()
import math      # used by generate_hexdump()


def strings_raw(data):
    '''Returns a string of printable characters, replacing non-printable
    characters with '.', or chr(46).'''
    return "".join([chr(byte) if byte >= 0x20 and byte < 0x7F else chr(46) for byte in data])


def strings(data):
    '''Returns the runs of at least 4 printable characters. Works similar to
    the Linux `strings` command.'''
    cleansed = "".join([chr(byte) if byte >= 0x20 and byte < 0x7F else chr(0) for byte in data])
    return filter(lambda string: len(string) >= 4, cleansed.split(chr(0)))


def generate_hexdump(data, char_per_row = 5):
    '''Returns an HTML table of the hexdump of the passed-in data.'''
    data_hex = binascii.hexlify(data).decode('utf-8')
    str_raw = strings_raw(data)
    str_hex = ''
    str_ascii = ''

    # Generates offset column: one byte offset per row, stepping by char_per_row
    offset_rows = math.ceil(len(data_hex)/(char_per_row * 2))
    offsets = [i for i in range(0, len(data_hex), char_per_row)][:offset_rows]
    str_offset = '<br>'.join([ str(hex(s)[2:]).zfill(4).upper() for s in offsets ])

    # Generates hex data column, breaking the line every char_per_row bytes
    c = 0
    for i in range(0, len(data_hex), 2):
        str_hex += data_hex[i:i + 2] + ' '
        if c == char_per_row - 1:
            str_hex += '<br>'
            c = 0
        else:
            c += 1

    # Generates ascii column of data
    for i in range(0, len(str_raw), char_per_row):
        str_ascii += str_raw[i:i + char_per_row] + '<br>'

    return f'''
<table id="GeoLocationHexTable" aria-describedby="GeoLocationHexTable" cellspacing="0">
<thead>
<tr>
<th style="border-right: 1px solid #000;border-bottom: 1px solid #000;">Offset</th>
<th style="width: 100px; border-right: 1px solid #000;border-bottom: 1px solid #000;">Hex</th>
<th style="border-bottom: 1px solid #000;">Ascii</th>
</tr>
</thead>
<tbody>
<tr>
<td style="white-space:nowrap; border-right: 1px solid #000;">{str_offset}</td>
<td style="border-right: 1px solid #000; white-space:nowrap;">{str_hex}</td>
<td style="white-space:nowrap;">{str_ascii}</td>
</tr></tbody></table>
'''
```

geodApplications.py

```python
import base64
import json
import sqlite3
import os

import scripts.artifacts.artGlobals

from packaging import version
from scripts.artifact_report import ArtifactHtmlReport
from scripts.ilapfuncs import logfunc, tsv, timeline, is_platform_windows


def get_geodApplications(files_found, report_folder, seeker):
    file_found = str(files_found[0])
    os.chmod(file_found, 0o0777)
    db = sqlite3.connect(file_found)
    cursor = db.cursor()
    cursor.execute(
    """
    SELECT count_type, app_id, createtime
    FROM mkcount
    """)

    all_rows = cursor.fetchall()
    usageentries = len(all_rows)
    data_list = []
    if usageentries > 0:
        for row in all_rows:
            data_list.append((row[0], row[1], row[2]))

        description = ''
        report = ArtifactHtmlReport('Geolocation')
        report.start_artifact_report(report_folder, 'Applications', description)
        report.add_script()
        data_headers = ("Count ID", "Application", "Creation Time")
        report.write_artifact_data_table(data_headers, data_list, file_found, html_escape = False)
        report.end_artifact_report()

        tsvname = 'Geolocation Applications'
        tsv(report_folder, data_headers, data_list, tsvname)
    else:
        logfunc('No data available for Geolocation Applications')

    db.close()
    return
```

geodPDPlaceCache.py

```python
import base64
import json
import sqlite3
import os

import scripts.artifacts.artGlobals

from packaging import version
from scripts.artifact_report import ArtifactHtmlReport
from scripts.ilapfuncs import logfunc, tsv, timeline, is_platform_windows, strings


def get_geodPDPlaceCache(files_found, report_folder, seeker):
    file_found = str(files_found[0])
    os.chmod(file_found, 0o0777)
    db = sqlite3.connect(file_found)
    cursor = db.cursor()
    cursor.execute(
    """
    SELECT requestkey, pdplacelookup.pdplacehash,
           datetime('2001-01-01', "lastaccesstime" || ' seconds') as lastaccesstime,
           datetime('2001-01-01', "expiretime" || ' seconds') as expiretime,
           pdplace
    FROM pdplacelookup
    INNER JOIN pdplaces on pdplacelookup.pdplacehash = pdplaces.pdplacehash
    """)

    all_rows = cursor.fetchall()
    usageentries = len(all_rows)
    data_list = []
    if usageentries > 0:
        for row in all_rows:
            # Unique printable strings from the pdplace BLOB, one per line (set order is arbitrary)
            pd_place = ''.join(f'{s}<br>' for s in set(strings(row[4])))
            data_list.append((row[0], row[1], row[2], row[3], pd_place))

        description = ''
        report = ArtifactHtmlReport('Geolocation')
        report.start_artifact_report(report_folder, 'PD Place Cache', description)
        report.add_script()
        data_headers = ("requestkey", "pdplacehash", "last access time", "expire time", "pd place")
        report.write_artifact_data_table(data_headers, data_list, file_found, html_escape = False)
        report.end_artifact_report()

        tsvname = 'Geolocation PD Place Caches'
        tsv(report_folder, data_headers, data_list, tsvname)
    else:
        logfunc('No data available for Geolocation PD Place Caches')

    db.close()
    return
```
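The PD Place Cache query converts lastaccesstime and expiretime with SQLite's datetime('2001-01-01', N || ' seconds'), which treats each value as seconds since 2001-01-01 00:00:00 UTC (Apple's reference date). The sketch below shows the same conversion in plain Python, plus a cross-check that runs the query's own expression on an in-memory database. The function names are hypothetical and are not part of iLEAPP.

```python
import sqlite3
from datetime import datetime, timedelta, timezone

# Apple's reference date: values are seconds counted from here (UTC).
APPLE_EPOCH = datetime(2001, 1, 1, tzinfo=timezone.utc)
# Seconds between the Unix epoch (1970-01-01) and the Apple reference date.
APPLE_TO_UNIX_OFFSET = 978307200


def apple_time_to_utc(seconds: float) -> datetime:
    """Hypothetical helper: Apple reference-date seconds -> aware UTC datetime."""
    return APPLE_EPOCH + timedelta(seconds=seconds)


def apple_time_to_unix(seconds: float) -> float:
    """Hypothetical helper: Apple reference-date seconds -> Unix timestamp."""
    return seconds + APPLE_TO_UNIX_OFFSET


def sqlite_apple_time(seconds: float) -> str:
    """Runs the same datetime() expression the PD Place Cache query uses."""
    conn = sqlite3.connect(":memory:")
    try:
        return conn.execute(
            "SELECT datetime('2001-01-01', ? || ' seconds')", (seconds,)
        ).fetchone()[0]
    finally:
        conn.close()
```

SQLite's datetime() returns UTC text unless a 'localtime' modifier is added, so both approaches should agree on the same UTC timestamp.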